CogCoM: Train Large Vision-Language Models Diving into Details through Chain of Manipulations

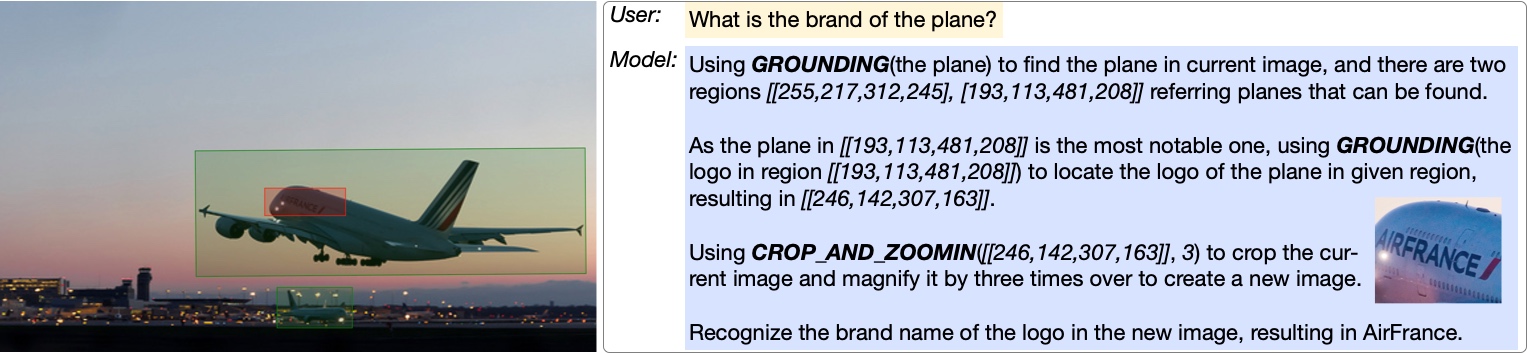

Vision-Language Models (VLMs) have demonstrated broad effectiveness thanks to extensive training in aligning visual instructions to responses. However, training that aligns models directly to conclusive answers leads them to skip essential visual reasoning. This causes failures on problems that demand meticulous visual inspection and produces unfaithful responses. Drawing inspiration from how humans solve visual problems (e.g., marking, zooming in), this paper introduces Chain of Manipulations, a mechanism that enables VLMs to solve problems step by step with evidence. After training, models can solve various visual problems by actively eliciting intrinsic manipulations (e.g., grounding, zooming in) together with their results (e.g., boxes, images) without involving external tools, while also allowing users to trace the causes of errors. We study the roadmap for implementing this mechanism, including (1) a flexible design of manipulations based on extensive analysis, (2) an efficient automated data generation pipeline, (3) a compatible VLM architecture capable of handling multi-turn, multi-image input, and (4) a model training process for versatile capabilities. Using this design, we also manually annotate 6K high-quality samples for challenging graphical mathematical problems. Our trained model, CogCoM, a 17B-parameter VLM equipped with this mechanism, achieves state-of-the-art performance across 9 benchmarks from 4 categories, demonstrating its effectiveness while preserving interpretability. Our code, model weights, and collected data are publicly available at https://github.com/THUDM/CogCoM.
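The abstract describes a chain in which the model emits manipulations (e.g., grounding, zooming in) together with their results (boxes, images), so that each step can be traced afterwards. As a rough illustration only, the Python sketch below records such a chain as a list of steps. It is not the CogCoM implementation or data format, and all of its names (`Chain`, `Step`, `crop_and_zoom`, `fake_grounding`) are hypothetical. Grounding is stubbed with a fixed lookup table because in CogCoM the VLM predicts boxes itself, and zooming in is approximated by nearest-neighbour upsampling on a toy pixel grid.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1), half-open pixel coordinates
Image = List[List[int]]          # toy grayscale image: a list of pixel rows


def fake_grounding(phrase: str, lookup: Dict[str, Box]) -> Box:
    """Hypothetical stand-in for the model's own grounding: returns a preset box."""
    return lookup[phrase]


def crop_and_zoom(image: Image, box: Box, factor: int = 2) -> Image:
    """Crop `box` from `image`, then upscale it by nearest-neighbour repetition."""
    x0, y0, x1, y1 = box
    crop = [row[x0:x1] for row in image[y0:y1]]
    zoomed: Image = []
    for row in crop:
        wide = [px for px in row for _ in range(factor)]    # repeat each pixel horizontally
        zoomed.extend([list(wide) for _ in range(factor)])  # repeat each row vertically
    return zoomed


@dataclass
class Step:
    manipulation: str      # e.g. "grounding" or "crop_and_zoom"
    args: Dict[str, Any]   # arguments passed to the manipulation
    result: Any            # e.g. a box or a new image


@dataclass
class Chain:
    steps: List[Step] = field(default_factory=list)

    def run(self, name: str, fn: Callable[..., Any], **kwargs: Any) -> Any:
        """Apply one manipulation and record it so the chain can be traced later."""
        result = fn(**kwargs)
        self.steps.append(Step(name, kwargs, result))
        return result

    def trace(self) -> List[str]:
        """List the manipulations in order, e.g. to locate the step that went wrong."""
        return [f"{i}: {s.manipulation}" for i, s in enumerate(self.steps)]


# Usage: ground a phrase to a box, then zoom into that box.
image = [[10 * r + c for c in range(4)] for r in range(4)]
chain = Chain()
box = chain.run("grounding", fake_grounding, phrase="sign", lookup={"sign": (1, 1, 3, 2)})
zoomed = chain.run("crop_and_zoom", crop_and_zoom, image=image, box=box, factor=2)
print(chain.trace())  # ['0: grounding', '1: crop_and_zoom']
print(zoomed)         # [[11, 11, 12, 12], [11, 11, 12, 12]]
```

Keeping every intermediate result in `Chain.steps` reflects the abstract's point that users can trace error causes. If the final answer is wrong, one can inspect whether the box from the grounding step or the zoomed image was already off.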

MS COCO

Visual Question Answering

GQA

TextVQA

MM-Vet

ST-VQA